Linguistic profiles of different GPT models

Linguistic profiles of different GPT models

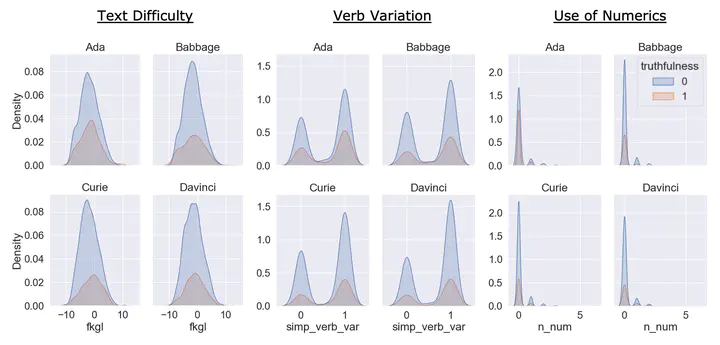

- We investigate the phenomenon of an LLM’s untruthful response using a large set of 220 handcrafted linguistic features. We focus on GPT-3 models and find that the linguistic profiles of responses are similar across model sizes.

- That is, how varying-sized LLMs respond to given prompts stays similar on the linguistic properties level.

- We expand upon this finding by training support vector machines that rely only upon the stylistic components of model responses to classify the truthfulness of statements.

- Though the dataset size limits our current findings, we present promising evidence that truthfulness detection is possible without evaluating the content itself.

- This paper was accepted at TrustNLP @ ACL 2023